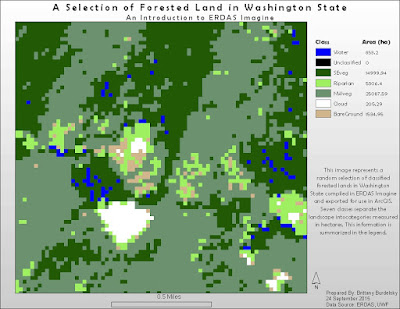

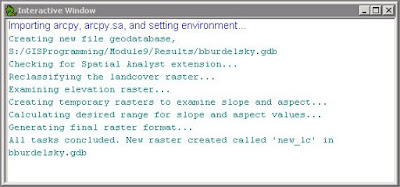

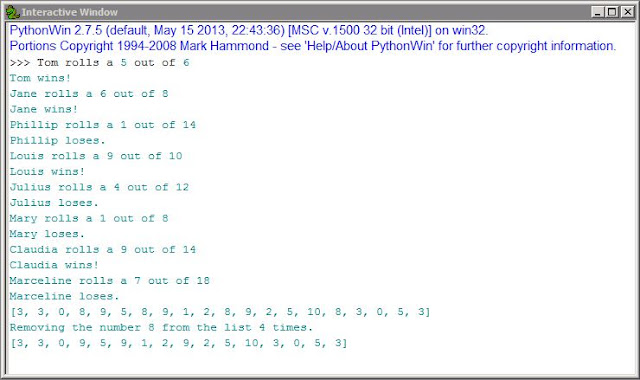

The general method is to import an image, create AOI features surrounding training sites (using the region growing and drawing tools), record the signatures from these AOIs in the Signature Editor, perform the supervised classification (via the Raster tab), and then recode the result to represent the final class designations. Running the supervised classification also creates a distance image. This image, along with comparative class histograms and mean plots (options in the Signature Editor window), can be used to examine the accuracy of the classification.
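The signature, classification, and recode steps above can be sketched in a few lines of NumPy. This is a minimal sketch, not ERDAS Imagine's implementation. It assumes a minimum-distance decision rule, with each signature reduced to its class mean vector. Under that rule the distance image is the Euclidean distance from each pixel to the mean of the class it was assigned to. The function names and the `training_masks` and `mapping` inputs are hypothetical.

```python
import numpy as np

def train_signatures(image, training_masks):
    """Hypothetical helper: mean spectral vector (signature) per class.

    image: (rows, cols, bands) array
    training_masks: dict of class_id -> boolean (rows, cols) AOI mask
    """
    image = np.asarray(image, dtype=float)
    return {cid: image[mask].mean(axis=0) for cid, mask in training_masks.items()}

def minimum_distance_classify(image, signatures):
    """Assign each pixel to the class whose mean is nearest (Euclidean).

    Returns (class_map, distance_image). Large values in distance_image
    flag pixels that fit their assigned signature poorly.
    """
    image = np.asarray(image, dtype=float)
    class_ids = np.array(list(signatures))
    means = np.stack([signatures[c] for c in class_ids])        # (k, bands)
    diffs = image[:, :, None, :] - means[None, None, :, :]       # (rows, cols, k, bands)
    dists = np.sqrt((diffs ** 2).sum(axis=-1))                    # (rows, cols, k)
    best = dists.argmin(axis=-1)
    return class_ids[best], dists.min(axis=-1)

def recode(class_map, mapping):
    """Merge signature classes into final classes (old_id -> new_id).

    Matching against the original map avoids chained reassignments.
    """
    out = class_map.copy()
    for old, new in mapping.items():
        out[class_map == old] = new
    return out

# Tiny two-band example with one training pixel per class.
img = np.array([[[10, 10], [12, 11]],
                [[50, 60], [52, 58]]], dtype=float)
masks = {1: np.array([[True, False], [False, False]]),
         2: np.array([[False, False], [True, False]])}
sigs = train_signatures(img, masks)
cmap, dist = minimum_distance_classify(img, sigs)
final = recode(cmap, {1: 10, 2: 20})
```

For a full scene, the `(rows, cols, k, bands)` intermediate can be large, so processing the image in row blocks would be a sensible refinement.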

My map below shows Germantown, Maryland, classified via a supervised classification. The Euclidean distance image is included as a reference for the degree of error (the brighter spots indicate pixels that are likely misclassified). Eight classes are ultimately used to represent the different land use/land cover classes, and the area covered by each class is noted in the legend. The band combination used for the original image is 5, 4, 6, as I felt these bands gave the best separability between spectral signatures. As an aside, one of the more difficult tasks was creating training sites for roads. The borders between roads and everything else in this image were quite pixelated, so there is a fair amount of error in that class: my training sites may have included the occasional pixel belonging to a different class. When growing areas of interest for some of the other classes, I also set higher spectral distance thresholds between pixels to capture some of the variation in those features. This introduced error into certain classes (particularly the fallow and agriculture classes), which is confirmed by the bright spots in the distance image inset.
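The trade-off between a larger region-growing distance and class purity can be illustrated with a simple seeded region grower. This is a hypothetical sketch, not the Imagine Region Growing tool. It assumes a neighbor joins the AOI when it is 4-connected to the region and its spectral Euclidean distance to the running region mean is within `max_distance`.

```python
from collections import deque

import numpy as np

def grow_region(image, seed, max_distance):
    """Hypothetical seeded region growing on a (rows, cols, bands) image.

    seed: (row, col) tuple. Returns a boolean (rows, cols) AOI mask.
    A 4-connected neighbor is added if its Euclidean distance to the
    current region mean is <= max_distance.
    """
    image = np.asarray(image, dtype=float)
    rows, cols, _ = image.shape
    region = np.zeros((rows, cols), dtype=bool)
    region[seed] = True
    total = image[seed].copy()   # running sum of member spectra
    count = 1
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and not region[nr, nc]:
                mean = total / count
                if np.linalg.norm(image[nr, nc] - mean) <= max_distance:
                    region[nr, nc] = True
                    total += image[nr, nc]
                    count += 1
                    queue.append((nr, nc))
    return region

# One-band strip: three similar pixels next to two pixels of another class.
strip = np.array([[[10], [11], [12], [30], [31]]], dtype=float)
tight = grow_region(strip, (0, 0), max_distance=3)
loose = grow_region(strip, (0, 0), max_distance=25)
```

With a threshold of 3, the AOI stops after the pixel valued 12. With a threshold of 25, it spills into the 30 and 31 pixels, which is the kind of contamination that can inflate error in classes like fallow and agriculture.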


Map 1: An exercise in automated classification using a supervised classification technique